We have two Deployment Servers (DS) running on MobiControl (MC) 14.4.4, managing 4,200 Android devices.

Following the Advanced Configuration settings, we have set different DS server priorities for a subset of devices. This directs half of the devices to connect to DS1 and the other half to DS2.

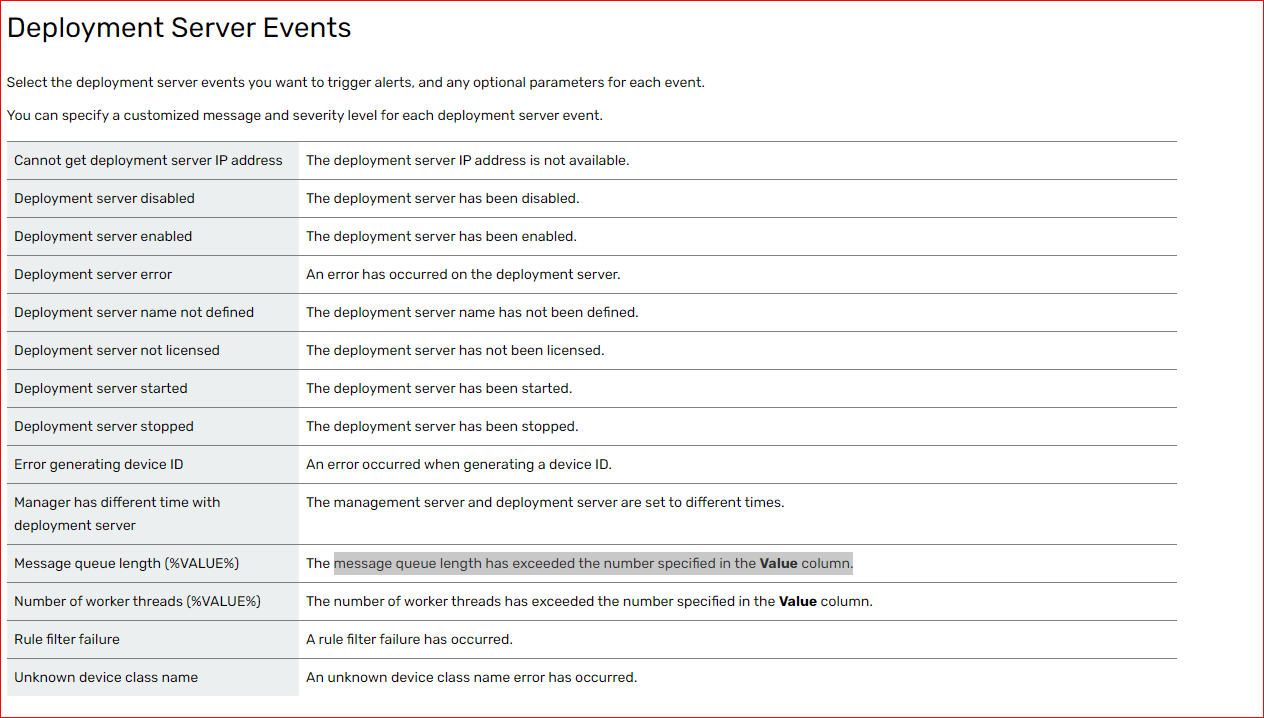

One DS consistently has many items queued (around 1,000), while the other has almost none.

We tried inverting the server priorities, and the queue imbalance followed the change.

Both device subsets have rules and profiles applied, and both are locked down in kiosk mode.

Is there any way to see what kinds of tasks are in the DS queue? If so, we could review the corresponding rule or profile, or split the device subsets differently.